Research

Design philosophy and research foundations behind DetectX verification systems.

Research Philosophy

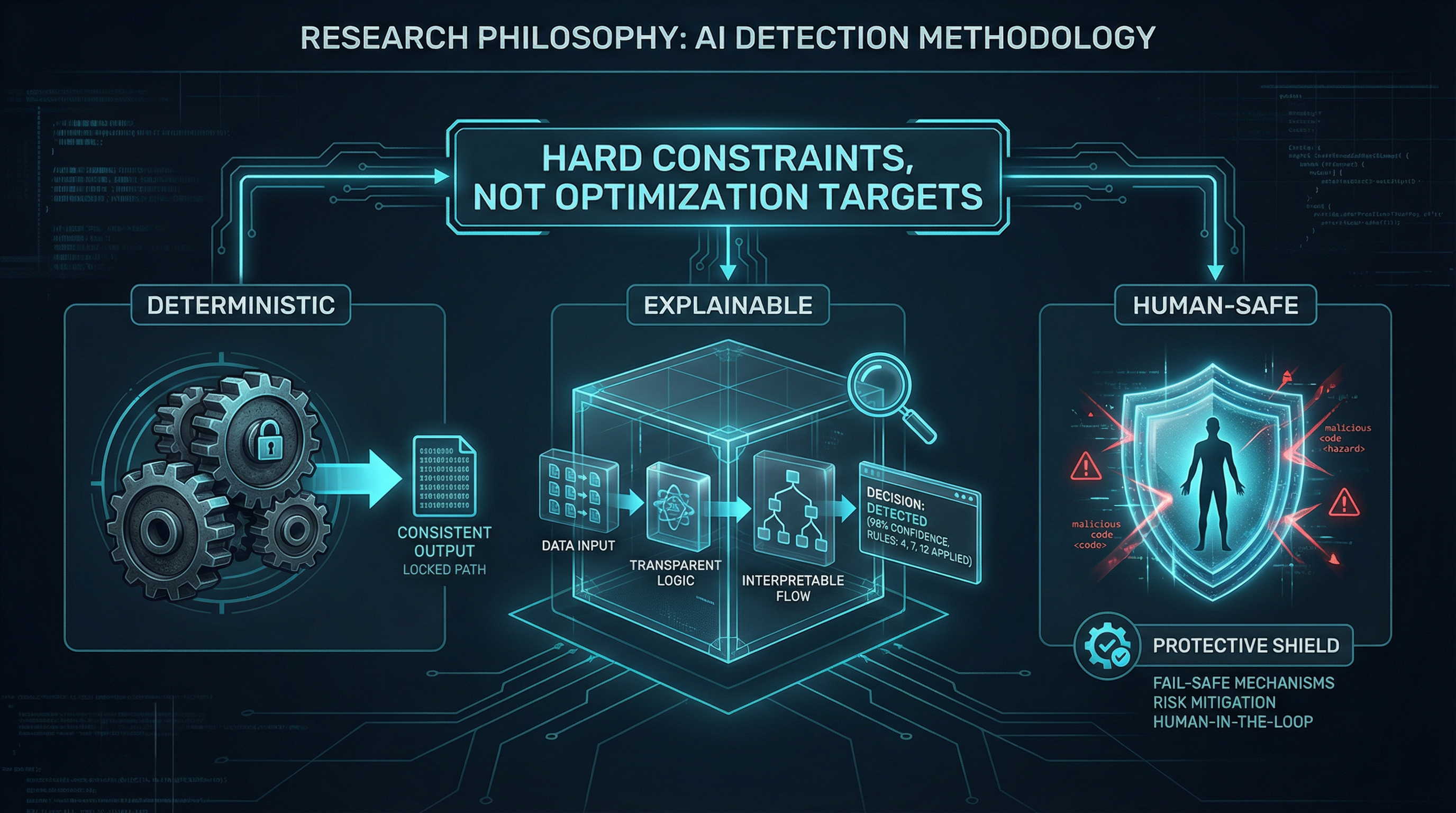

DetectX is built on the principle that forensic verification must be deterministic, explainable, and human-safe. These are not optimization targets—they are hard constraints that shape every design decision.

The research program prioritizes avoiding false positives over maximizing detection sensitivity. A system that occasionally misclassifies human work as AI-generated causes more harm than a system that occasionally fails to detect AI content. Human safety is non-negotiable.

Why Probability-Based Detection Fails

Most AI detection systems output probability scores: "87% likely AI-generated." These scores create several fundamental problems:

- Threshold ambiguity: What does 87% mean? Is 60% enough to act on? Different users apply different thresholds, leading to inconsistent outcomes.

- False precision: Probability scores imply a level of certainty that the underlying models cannot support. The number feels authoritative but is often arbitrary.

- Reproducibility failures: Many probabilistic systems produce different scores on repeated analysis of the same content, undermining forensic credibility.

- Human harm: When human-created work receives a high AI probability score, the creator faces an impossible burden of proof. The score becomes an accusation without recourse.

DetectX rejects probability-based outputs entirely. The system reports structural observations, not statistical inferences.
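The threshold-ambiguity problem described above can be illustrated with a minimal sketch. The platform names and threshold values are hypothetical and chosen only for illustration. The 0.87 score is the example from the text.

```python
def act_on_score(score: float, threshold: float) -> str:
    """Map a probability-style score to an action using a caller-chosen threshold."""
    return "flag" if score >= threshold else "pass"


# Hypothetical thresholds applied by three different users of the same detector.
thresholds = {"platform_a": 0.60, "platform_b": 0.90, "platform_c": 0.95}
score = 0.87  # the "87% likely AI-generated" example

# The same content and the same score lead to different outcomes.
outcomes = {name: act_on_score(score, t) for name, t in thresholds.items()}
print(outcomes)
```

One platform flags the work and two let it pass, although nothing about the content or the score has changed.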

Human-Normalized Baselines

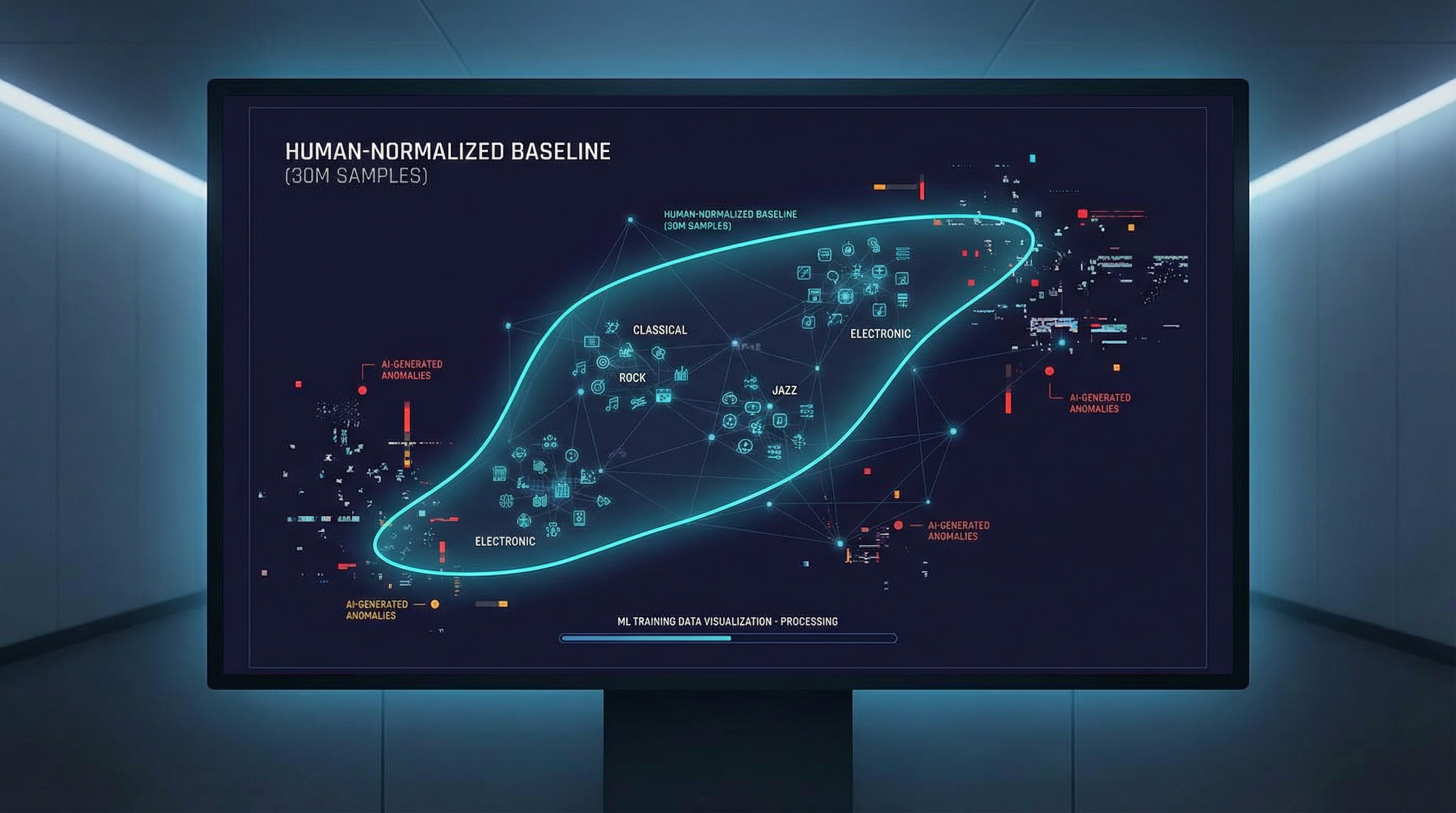

The foundation of DetectX verification is the human-normalized baseline: a reference model constructed exclusively from verified human-created content.

Baseline construction follows strict protocols:

- All baseline content is verified human-created through provenance documentation

- Baselines span diverse genres, production styles, and recording conditions

- Baseline parameters are calibrated to minimize false positive risk

- Baseline updates follow documented versioning and validation procedures

The baseline defines what "human" looks like in signal geometry terms. Content that falls within baseline parameters is reported as showing no AI signal evidence.
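As a rough illustration of a baseline check, the sketch below assumes the baseline can be represented as a versioned set of per-feature intervals. This representation, the feature names, the numeric bounds, and the wording of the out-of-baseline report are all assumptions made for the example. None of them describes the actual DetectX baseline format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    version: str   # baselines are versioned, as described above
    bounds: dict   # hypothetical: feature name -> (low, high) interval


def outside_baseline(features: dict, baseline: Baseline) -> list:
    """Return the sorted names of features that fall outside their baseline interval."""
    deviations = []
    for name, (low, high) in baseline.bounds.items():
        if not (low <= features[name] <= high):
            deviations.append(name)
    return sorted(deviations)


def report(features: dict, baseline: Baseline) -> str:
    """Report a structural observation rather than a probability."""
    deviations = outside_baseline(features, baseline)
    if not deviations:
        return "No AI signal evidence"
    # Assumed wording for the out-of-baseline case.
    return "Outside baseline: " + ", ".join(deviations)


# Hypothetical feature names and bounds, for illustration only.
baseline = Baseline(
    version="example-1",
    bounds={"spectral_flatness": (0.10, 0.60), "stereo_correlation": (0.20, 0.95)},
)
inside = {"spectral_flatness": 0.35, "stereo_correlation": 0.80}
outside = {"spectral_flatness": 0.72, "stereo_correlation": 0.80}

print(report(inside, baseline))
print(report(outside, baseline))
```

The output names which measurements fall outside the reference, which keeps the result explainable without attaching a likelihood to it.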

Dual-Engine Verification Approach

DetectX uses a dual-engine architecture that prioritizes human protection while maintaining effective AI detection:

Classifier Engine (Primary)

Deep learning classifier trained on 30,000,000+ verified human music samples. Optimized for <1% false positives. If the classifier says Human, the verdict is trusted immediately.

Reconstruction Engine (Secondary)

Activates only when the Classifier Engine's output exceeds the 90% threshold, that is, when content is suspected to be AI-generated. It analyzes stem separation and reconstruction differentials to improve AI detection accuracy.

This approach ensures human creators are protected first. The Classifier Engine acts as a protective filter, while the Reconstruction Engine provides secondary verification for suspected AI content.
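The cascade can be sketched as follows. This is a minimal sketch under stated assumptions, not the DetectX implementation. It assumes the classifier returns an internal AI score between 0 and 1, that scores at or below 0.90 count as a Human verdict, and that the secondary engine returns a boolean confirmation. The function names and the handling of an unconfirmed case are hypothetical.

```python
from typing import Callable

AI_SCORE_THRESHOLD = 0.90  # from the text: the secondary engine activates above 90%


def verify(
    audio: bytes,
    classifier: Callable[[bytes], float],      # hypothetical: returns an AI score in [0, 1]
    reconstruction: Callable[[bytes], bool],   # hypothetical: True if AI evidence is confirmed
) -> str:
    """Run the classifier first and consult the secondary engine only for suspected AI content."""
    score = classifier(audio)
    if score <= AI_SCORE_THRESHOLD:
        # A Human verdict is trusted immediately; the secondary engine never runs.
        return "Human"
    # Assumption: an unconfirmed suspicion resolves in favor of the human creator.
    return "AI" if reconstruction(audio) else "Human"


# Usage with stub engines standing in for the real models.
print(verify(b"track-1", lambda a: 0.12, lambda a: True))   # classifier says Human
print(verify(b"track-2", lambda a: 0.97, lambda a: True))   # suspected and confirmed
print(verify(b"track-3", lambda a: 0.97, lambda a: False))  # suspected, not confirmed
```

Placing the low-false-positive classifier first means the more aggressive secondary analysis can only ever be applied to content that is already suspected, which matches the human-protection ordering described above.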

Determinism as a Forensic Requirement

Forensic systems must be deterministic. The same input must always produce the same output. This is not a preference—it is a requirement for any system whose results may inform consequential decisions.

DetectX achieves determinism through:

- Fixed normalization pipelines with consistent preprocessing

- Versioned models and baseline references with documented parameters

- Reproducible analysis workflows that can be independently verified

- Binary verdict outputs without probabilistic ambiguity
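One way to make the reproducibility requirement checkable is to bind each verdict to a hash of the input and to the versions that produced it, then compare records across repeated runs. The sketch below assumes this approach. The version strings, field names, and helper functions are hypothetical and are not taken from DetectX.

```python
import hashlib
import json

MODEL_VERSION = "classifier-1.0.0"    # hypothetical version identifier
BASELINE_VERSION = "baseline-2024.1"  # hypothetical version identifier


def analysis_record(audio: bytes, verdict: str) -> str:
    """Serialize a verdict together with the input hash and the versions used."""
    record = {
        "input_sha256": hashlib.sha256(audio).hexdigest(),
        "model_version": MODEL_VERSION,
        "baseline_version": BASELINE_VERSION,
        "verdict": verdict,  # binary verdict, no probability attached
    }
    # Sorted keys and fixed separators give a byte-identical encoding every time.
    return json.dumps(record, sort_keys=True, separators=(",", ":"))


def is_reproducible(analyze, audio: bytes, runs: int = 3) -> bool:
    """Return True if repeated analysis of the same bytes yields identical records."""
    records = {analysis_record(audio, analyze(audio)) for _ in range(runs)}
    return len(records) == 1


# Usage with a stub analyzer standing in for the real pipeline.
print(is_reproducible(lambda audio: "Human", b"example audio bytes"))
```

Because the record includes the model and baseline versions, an independent party can rerun the same versions on the same bytes and compare the serialized records directly.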

Failure Cases and Corrections

No verification system is perfect. DetectX acknowledges known limitations and maintains documented correction procedures:

- Edge cases: Unusual production techniques may produce signal geometry outside baseline parameters. These cases are documented and baselines are updated accordingly.

- Hybrid content: Content that combines human and AI elements may produce ambiguous results. The system reports what it observes without inferring intent.

- Format artifacts: Extreme compression or format conversion may introduce signal artifacts. Minimum quality thresholds are enforced.

When errors are identified, baseline parameters are reviewed and updated through documented versioning procedures. All corrections are logged and traceable.

What DetectX Refuses to Do

Certain capabilities are intentionally excluded from the DetectX system:

- Authorship determination: DetectX does not identify who created content. It reports structural observations only.

- Model attribution: DetectX does not identify which AI system generated content. It detects structural anomalies, not model signatures.

- Intent inference: DetectX does not infer creative intent, deception, or purpose. It reports signal geometry.

- Legal conclusions: DetectX does not provide legal opinions or certifications. Results are technical observations for professional interpretation.

Ongoing Research Areas

Active research programs include:

- Baseline expansion for additional audio genres and production contexts

- Verification systems for image, text, and video content

- Robustness testing against adversarial manipulation

- Cross-modal verification for multimedia content

New modalities will be introduced only after they meet the same forensic reliability and human-safety standards as DetectX Audio.

DetectX exists to provide a forensic reference that can be trusted. The research program is guided by a single principle: verification systems must protect human creators, not endanger them.