Enhanced Mode: Dual-Engine Architecture Released

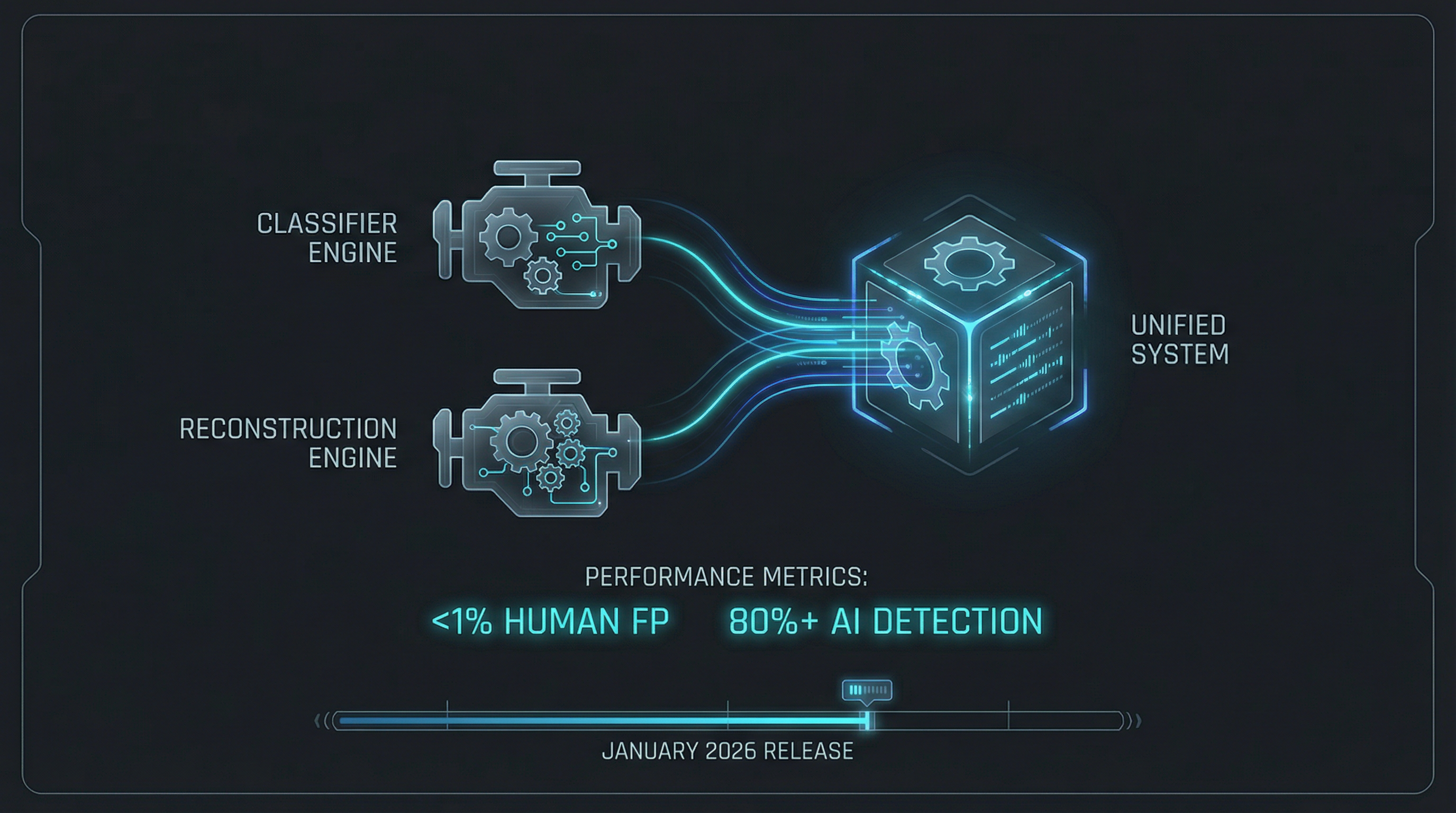

DetectX Audio now operates exclusively in Enhanced Mode, a dual-engine verification architecture designed to maximize protection for human creators while maintaining effective AI detection.

Architecture Overview

Enhanced Mode combines two complementary engines working in sequence:

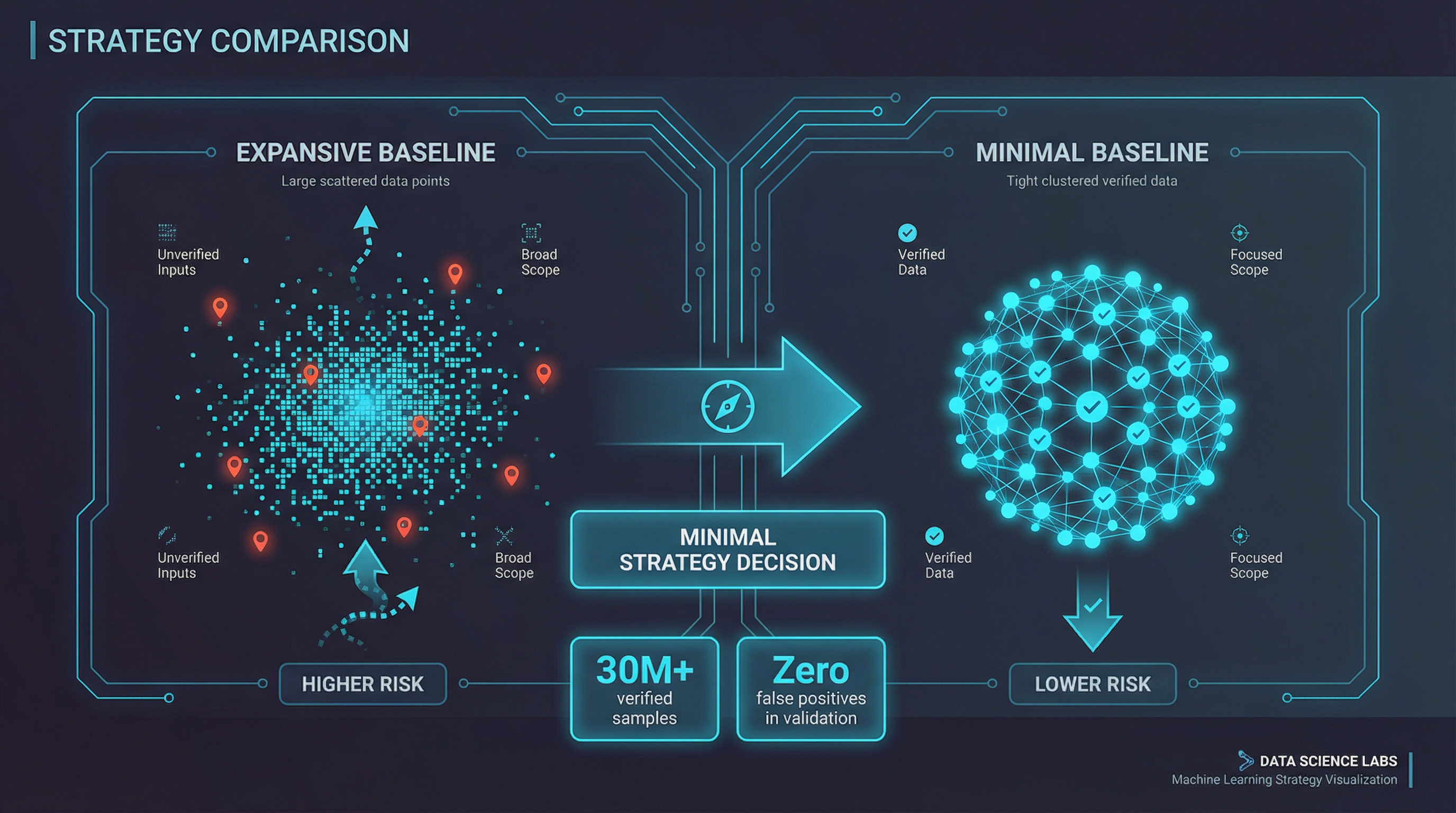

- Classifier Engine (Primary): A deep learning classifier trained on more than 30,000,000 verified human music samples and optimized for near-zero false positives. If the Classifier Engine determines that content is human, that verdict is trusted immediately.

- Reconstruction Engine (Secondary): Activates only when the Classifier Engine score exceeds the 90% threshold. It analyzes stem separation and reconstruction differentials to improve AI detection accuracy.
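The routing between the two engines can be sketched as a simple cascade. This is a minimal, hypothetical illustration, not DetectX code: it assumes the Classifier Engine returns an AI-likelihood score in [0, 1], that "exceeds 90%" means strictly greater than 0.90, and that an AI verdict requires the Reconstruction Engine to confirm. The names `enhanced_mode_verdict`, `classifier_score`, and `reconstruction_flags_ai` are placeholders invented for this sketch.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: the classifier outputs an AI-likelihood in [0, 1], and the
# 90% threshold is a strict "greater than" comparison.
CLASSIFIER_THRESHOLD = 0.90


@dataclass(frozen=True)
class Verdict:
    is_ai: bool   # binary verdict; no probabilistic score is exposed
    engine: str   # which engine produced the final verdict


def enhanced_mode_verdict(
    audio: bytes,
    classifier_score: Callable[[bytes], float],
    reconstruction_flags_ai: Callable[[bytes], bool],
) -> Verdict:
    """Hypothetical two-stage cascade: Classifier Engine first, Reconstruction
    Engine only when the classifier score exceeds the threshold."""
    score = classifier_score(audio)
    if score <= CLASSIFIER_THRESHOLD:
        # Classifier treats the content as human: trust it immediately
        # and never invoke the second engine.
        return Verdict(is_ai=False, engine="classifier")
    # Score exceeds the threshold, so the Reconstruction Engine decides.
    # Assumption: an AI verdict requires this engine to confirm.
    return Verdict(is_ai=reconstruction_flags_ai(audio), engine="reconstruction")


if __name__ == "__main__":
    # Stub engines standing in for the real models (hypothetical).
    print(enhanced_mode_verdict(b"track", lambda a: 0.42, lambda a: True))
    print(enhanced_mode_verdict(b"track", lambda a: 0.97, lambda a: True))
```

Under these assumptions, a track can only receive an AI verdict if both engines agree, so the cascade can never flag a human track that the classifier alone would have passed.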

Performance Characteristics

- Human False Positive Rate: below 1%, protecting human creators from misclassification

- AI Detection Rate: Strong detection of confirmed AI-generated content

- Binary Verdicts: Results are reported as verdicts with structural observations, not as probabilistic scores
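Because verdicts are binary, both headline metrics reduce to simple proportions over labelled test sets. The sketch below assumes the standard definitions (false positive rate = share of known-human tracks given an AI verdict; detection rate = share of known-AI tracks given an AI verdict); the source does not spell out how DetectX computes them, and the function names and counts are hypothetical.

```python
from typing import Sequence


def false_positive_rate(verdicts_on_human: Sequence[bool]) -> float:
    """Share of known-human tracks that received an AI verdict (True)."""
    if not verdicts_on_human:
        raise ValueError("need at least one human-labelled track")
    return sum(verdicts_on_human) / len(verdicts_on_human)


def detection_rate(verdicts_on_ai: Sequence[bool]) -> float:
    """Share of known-AI tracks that received an AI verdict (True)."""
    if not verdicts_on_ai:
        raise ValueError("need at least one AI-labelled track")
    return sum(verdicts_on_ai) / len(verdicts_on_ai)


if __name__ == "__main__":
    # Illustrative counts only, not DetectX test results.
    human = [True] * 3 + [False] * 997   # 3 of 1,000 human tracks flagged
    ai = [True] * 850 + [False] * 150    # 850 of 1,000 AI tracks flagged
    print(f"FPR: {false_positive_rate(human):.1%}")
    print(f"Detection: {detection_rate(ai):.1%}")
```

With these made-up counts the false positive rate is 0.3%, which would fall under the stated 1% bound.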

Design Philosophy

The dual-engine approach treats human safety as a hard constraint. By using the Classifier Engine as the primary filter, the system minimizes the risk that human creative work is unfairly flagged. The Reconstruction Engine serves as a secondary check and runs only when the primary classifier indicates potential AI content.

This update documents a change to the system architecture. Performance metrics are based on internal testing and may vary across content types.